Not every page on your website is suited for Google’s index. As a marketer, your job is to communicate your wishes to Google; otherwise, Google will decide for itself, and that often leaves many an SEO with smoke coming out of their ears.

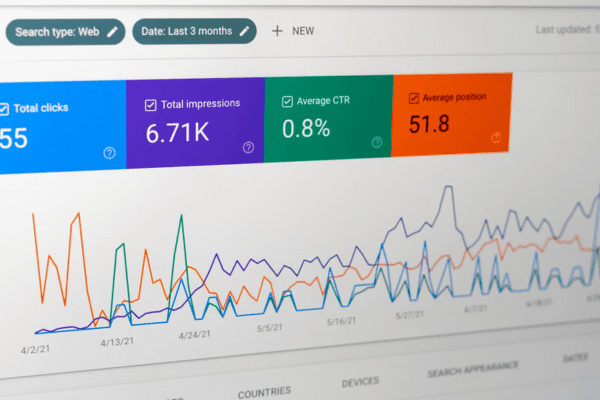

Thankfully, the Index Coverage report in Google Search Console gives you a clearer picture of how Googlebot interprets your site.

Googlebot can interpret, and subsequently classify, a page in many different ways. With over 25 categories, it can be confusing to work out what they all mean.

Here is your complete guide to understanding Google’s Index Coverage report.

Valid Pages

Let’s start with the easy ones. Pages with a valid status have been indexed by Google and are good to go. There are two potential categories here:

- Submitted and indexed: You submitted the URL for indexing, and it was indexed.

- Indexed, not submitted in sitemap: The URL was discovered by Google and indexed. We recommend submitting all important URLs using a sitemap.
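Google distinguishes URLs you submitted from URLs it found on its own, and a sitemap is the usual way to submit your important pages. The snippet below is a minimal sketch of generating a sitemap in the sitemaps.org XML format, using only Python’s standard library. The function name `build_sitemap` and the example URLs are hypothetical. The sketch writes only the required `<loc>` element for each URL and leaves out optional fields such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol, version 0.9.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap XML document (as a str) listing `urls`."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # <loc> is the only required child of <url>; ElementTree escapes
        # characters such as "&" in the URL automatically.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical URLs; list your own important pages here.
    pages = [
        "https://www.example.com/",
        "https://www.example.com/products/blue-widget",
    ]
    print(build_sitemap(pages))
```

You could save the output as a file at your site root (for example, `sitemap.xml`) and submit it in Search Console’s Sitemaps report. The sitemap format requires special characters in URLs to be entity-escaped, which is why the sketch relies on ElementTree rather than string concatenation.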

Potential Google Index Errors

- Server error (5xx): Your server returned a 500-level error when Googlebot requested the page.

- Redirect error: Google encountered one of the following redirect errors: a redirect chain that was too long, a redirect loop, a redirect URL that eventually exceeded the maximum URL length, or a bad or empty URL in the redirect chain.

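- Submitted URL blocked by robots.txt: You submitted this page for indexing, but it is blocked by your robots.txt file. Use the robots.txt tester to find the rule that is blocking it.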

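- Submitted URL marked ‘noindex’: You submitted this page for indexing, but it carries a ‘noindex’ directive in a meta tag or HTTP header. Remove the directive if you want the page to be indexed.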

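- Submitted URL seems to be a Soft 404: You submitted this page for indexing, but it looks like a soft 404. The page returns a success status code while its content looks like an error or ‘not found’ page.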

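- Submitted URL returns unauthorized request (401): You submitted this page for indexing, but Google received a 401 (Unauthorized) response. Either remove the authorization requirement for this page or give Googlebot access to it.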

- Submitted URL not found (404): You submitted this URL for indexing, but it returned a 404 (Not Found) error.

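- Submitted URL has crawl issue: You submitted this page for indexing, and Google encountered an unspecified crawling error that does not fit any of the other categories. The URL Inspection tool can help you debug the page.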
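You can reproduce the redirect error types listed above offline. The following is a minimal sketch that replaces real HTTP requests with a hypothetical `redirects` dictionary, which maps each URL to the `Location` it redirects to. Two values are assumptions rather than Google’s exact internals. `MAX_HOPS = 10` reflects the commonly cited figure that Googlebot follows up to 10 redirect hops. `MAX_URL_LENGTH = 2048` is purely an illustrative limit. The "bad URL" test is also a simplification: it only rejects empty, non-HTTP(S) or host-less targets.

```python
from urllib.parse import urljoin, urlparse

# ASSUMPTIONS (not Google's documented internals):
# Googlebot is commonly said to follow up to 10 redirect hops, and
# 2048 characters is only an illustrative URL-length limit.
MAX_HOPS = 10
MAX_URL_LENGTH = 2048


def check_redirects(start_url, redirects):
    """Follow a redirect map from start_url and classify any redirect error.

    `redirects` is a hypothetical stand-in for real HTTP responses: it maps
    a URL to the Location value it redirects to. URLs not in the map are
    treated as final pages. Returns (final_url, None) on success, or
    (None, error_description) when one of the redirect errors occurs.
    """
    seen = {start_url}
    url = start_url
    hops = 0
    while url in redirects:
        if hops == MAX_HOPS:
            return None, "redirect chain too long"
        location = (redirects[url] or "").strip()
        if not location:
            return None, "bad or empty URL in redirect chain"
        # Resolve relative Location values against the current URL.
        url = urljoin(url, location)
        hops += 1
        # Simplified "bad URL" check: require an http(s) scheme and a host.
        parts = urlparse(url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            return None, "bad or empty URL in redirect chain"
        if len(url) > MAX_URL_LENGTH:
            return None, "redirect URL exceeded max URL length"
        if url in seen:
            return None, "redirect loop"
        seen.add(url)
    return url, None


if __name__ == "__main__":
    # Hypothetical redirect map for illustration.
    site = {
        "https://www.example.com/old": "/new",
        "https://www.example.com/loop-a": "/loop-b",
        "https://www.example.com/loop-b": "/loop-a",
    }
    for start in ("https://www.example.com/old", "https://www.example.com/loop-a"):
        print(start, check_redirects(start, site))
```

In practice, you would build the redirect map from a crawl of your own site, for example from the `Location` headers your crawler records. You would then flag every URL that comes back with an error.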

Warning Status Pages

Pages with a warning status might require your attention and may or may not have been indexed by Google, depending on the specific result.

Indexed, though blocked by robots.txt:

The page was indexed even though it is blocked by robots.txt. Google marks this as a warning because it isn’t sure whether you intended to block the page from search results. If you do want to keep this page out of search results, robots.txt is not the correct mechanism. Instead, either use a ‘noindex’ directive or require authentication to access the page. Because Google can only see a ‘noindex’ directive on a page it is allowed to crawl, the page must not also be blocked by robots.txt. You can use the robots.txt tester to find out which rule is blocking the page. Because of the robots.txt block, any snippet shown for the page will probably be sub-optimal. If you do not want to block this page, update your robots.txt file to unblock it.

Excluded Pages

These pages are typically not indexed, and Google considers that appropriate. They are duplicates of indexed pages, pages blocked from indexing by some mechanism on your site, or pages not indexed for a reason that Google does not consider an error.

- Excluded by ‘noindex’ tag: When Google tried to index the page, it encountered a ‘noindex’ directive and therefore did not index it.

- Blocked by page removal tool: The page is currently blocked by a URL removal request. Keep in mind that removal requests only last about 90 days from the removal date. After that, Google may revisit and index the page even if you do not submit an indexing request.

- Blocked by robots.txt: A robots.txt file blocked Googlebot from this page. Double-check that these URLs are meant to be blocked. If you manage your exclusions carefully, finding URLs on this list is most likely expected.

- Blocked due to unauthorized request (401): Googlebot received a 401 (Unauthorized) response for this URL, so it could not access the page. The page is most likely password protected.

- Crawl anomaly: Google encountered an unexpected issue while crawling these URLs. The cause is quite often a 4xx- or 5xx-level response code.

- Crawled – currently not indexed: Googlebot crawled the page but did not add it to Google’s index. This can be due to a number of factors, and the page may still be indexed in the future. This status can be the first sign of link bloat.

- Discovered – currently not indexed: Google found the page but has not crawled it yet. Typically, Google wanted to crawl the URL but expected that doing so would overload the site, so it rescheduled the crawl.

- Alternate page with proper canonical tag: This page is a duplicate of a page that Google recognizes as canonical. This is an example of a good exclusion: it tells us that Google is honouring our canonical link.

- Duplicate without user-selected canonical: This page has duplicates, none of which is marked as canonical. The page is missing a canonical link, so Google is deciding for itself which version to index. This can lead to good pages being skipped for indexing.

- Duplicate, Google chose different canonical than user: This page is marked as canonical for a set of pages, but Google thinks another URL makes a better canonical. Google’s choice is not always in the webmaster’s best interest. For example, Google will often treat closely related products as duplicates of one another.

- Not found (404): This URL returned a 404 error when Googlebot requested it. Google discovered the URL on its own, for example through a link, rather than through a submission or sitemap.

- Page removed because of legal complaint: The page was removed from the index because of a legal complaint.

- Page with redirect: Google crawled a URL that redirects to a different destination.

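- Soft 404: The page returns what Google considers a soft 404: a user-friendly ‘not found’ message without a corresponding 404 status code. Return a real 404 status code for pages that truly do not exist, or add enough content to show that the page is not an error page.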

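- Duplicate, submitted URL not selected as canonical: You submitted this URL for indexing, but it belongs to a set of duplicate pages without an explicitly marked canonical. Google chose another URL from the set as canonical and indexed that URL instead of this one.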
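Several of the statuses above come down to three signals you can check yourself:

- whether robots.txt blocks Googlebot,
- whether the page carries a ‘noindex’ directive in a meta robots tag or an `X-Robots-Tag` HTTP header,
- and which URL its `rel="canonical"` link points to.

The sketch below checks all three with Python’s standard library. It works on HTML, robots.txt and header text you have already fetched. `audit_page` and `IndexSignalParser` are hypothetical names, and the sketch simplifies in two ways. It ignores user-agent-prefixed header values such as `googlebot: noindex`. Also, `urllib.robotparser` may not match Google’s robots.txt handling in every case, such as wildcard patterns.

```python
from html.parser import HTMLParser
from urllib import robotparser


class IndexSignalParser(HTMLParser):
    """Collect meta robots directives and the rel=canonical URL from HTML."""

    def __init__(self):
        super().__init__()
        self.robots_directives = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attrs.get("content", "").split(","):
                self.robots_directives.append(directive.strip().lower())
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.canonical = attrs.get("href") or None


def audit_page(url, html, robots_txt, x_robots_tag="", user_agent="Googlebot"):
    """Summarise the robots.txt, noindex and canonical signals for one page."""
    # Parse robots.txt from text instead of fetching it over the network.
    robots = robotparser.RobotFileParser()
    robots.parse(robots_txt.splitlines())

    parser = IndexSignalParser()
    parser.feed(html)
    parser.close()

    header_directives = [d.strip().lower() for d in x_robots_tag.split(",")]
    directives = set(parser.robots_directives) | set(header_directives)

    return {
        "blocked_by_robots_txt": not robots.can_fetch(user_agent, url),
        # 'none' is shorthand for 'noindex, nofollow'.
        "noindex": bool(directives & {"noindex", "none"}),
        "canonical": parser.canonical,
        "self_canonical": parser.canonical == url,
    }


if __name__ == "__main__":
    # Hypothetical robots.txt and page for illustration.
    robots_txt = "User-agent: *\nDisallow: /private/\n"
    html = (
        '<head><meta name="robots" content="noindex, follow">'
        '<link rel="canonical" href="https://www.example.com/a"></head>'
    )
    print(audit_page("https://www.example.com/a", html, robots_txt))
```

Watch for URLs where `blocked_by_robots_txt` and `noindex` are both true. Googlebot never crawls such a page, so it never sees the ‘noindex’ directive. That combination is how a page can end up with the ‘Indexed, though blocked by robots.txt’ warning.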